There are multiple ways you can extract or scrape the content of a website using only Google Sheets. To do so, multiple formulas are at your disposal. In this article, I will go over the three most widely used formulas that you can use to scrape the content of websites without coding knowledge required. Let’s get to it.

Import Data from Tables in Website to Google Sheets

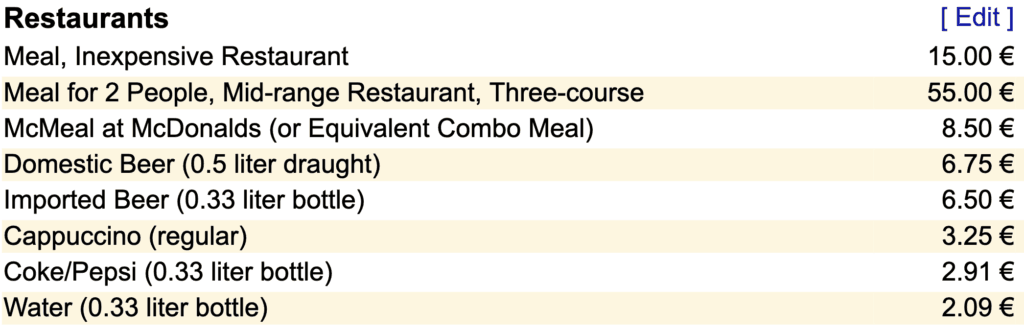

One of the most common ways of displaying large chunks of data is by using tables. HTML tables are dead easy to code and generate so people tend to use it very often. You can find it on Wikipedia but also on websites such as Expatistan or Numbeo (Screenshot below)

As you can see from this image, there is clearly two columns: One for the factors and one for the values.

Looking at the code also shows a clear HTML table, easy to read, therefore easy to scrape using Google Sheets…

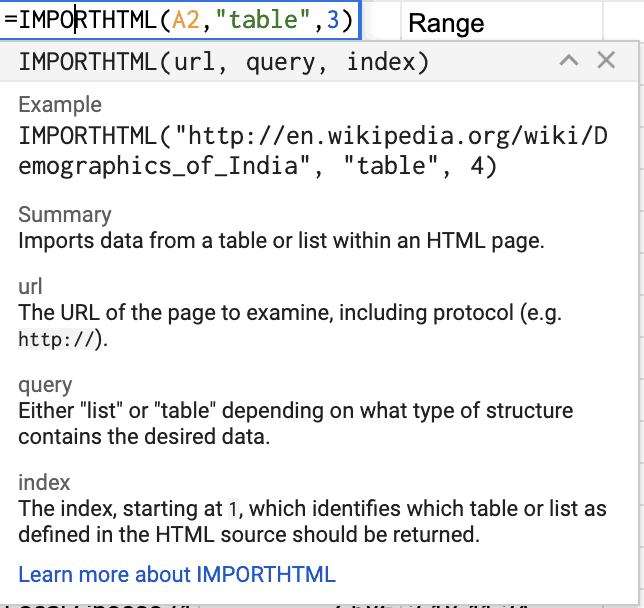

To get the table from a website to Google Sheets, we need to take a deeper look at what the formula we are going to be using, =IMPORTHTML, requires.

Looking at the formula, it requires a URL, the kind of data we’re looking to extract (Either a list or a table) and an index.

Looking at our Numbeo page (https://www.numbeo.com/cost-of-living/in/Paris), if you inspect the code and look for how many <table> elements there is, you can notice that the table that contains the data depicted two screenshots above is in the third table present in the source code.

Knowing that, we can start crafting our formula like this :

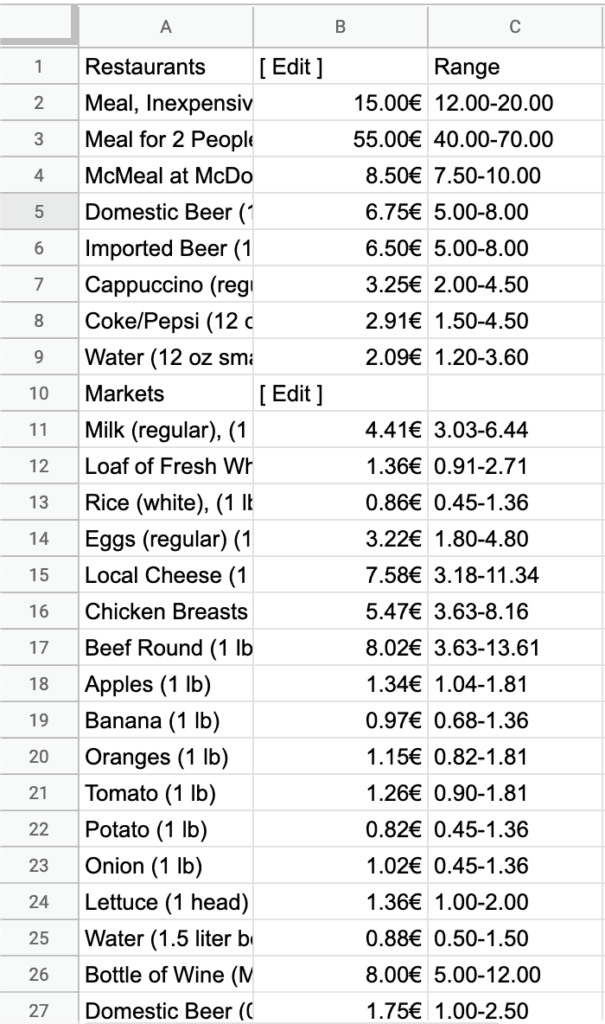

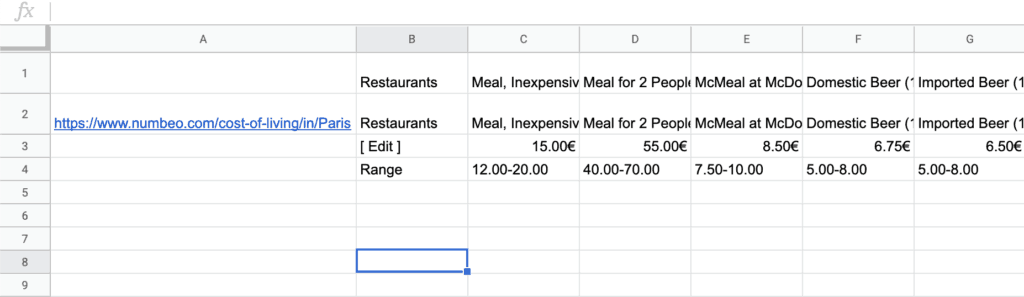

And here’s the result:

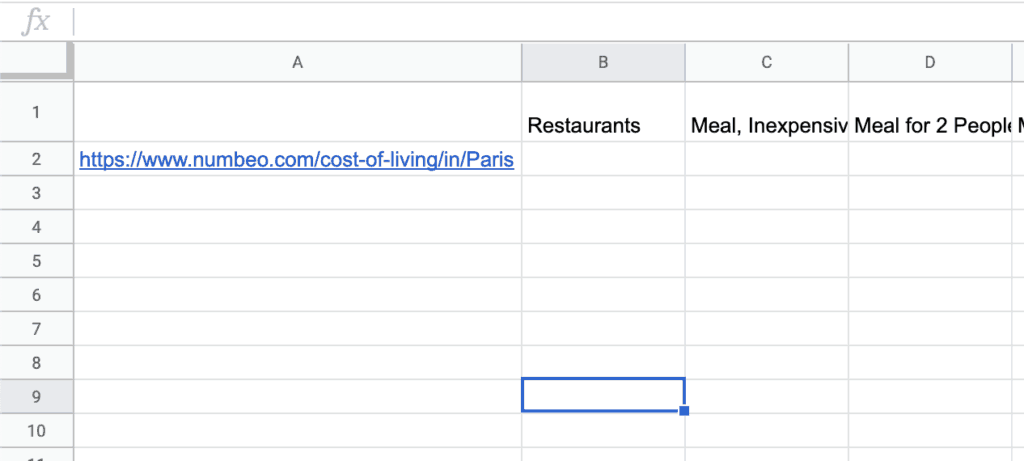

Now, there is a nice use case for Numbeo. You could for instance automatically extracts the full table’s data of multiple pages in the same spreadsheet.

To do this, let’s create a column A dedicated to URLs and the row 1 to the factors names.

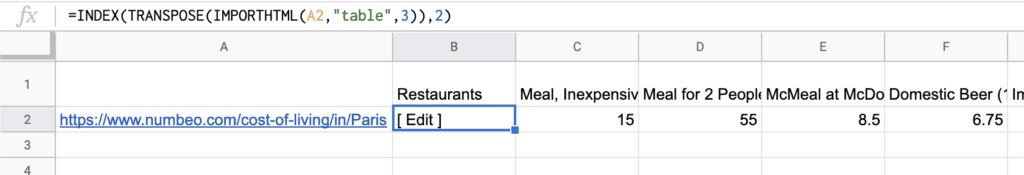

Now, instead of just using the IMPORTHTML formula as we did before, we need to tweak its output using other formulas, namely TRANSPOSE and INDEX. The output generated by the IMPORTHTML formula alone is a table that is three columns wide and 65 rows long. The format of our table is made so that the factors should be the columns and the headings should become rows. The formula =TRANSPOSE does just that.

Despite being in the right direction, this formula is still unusable for our purpose. The goal now is to make the formula only outputs the second row here. To do so, the formula INDEX is going to come handy.

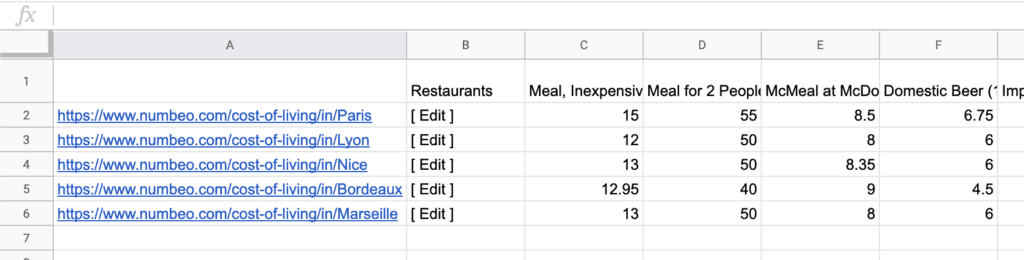

And that’s it. Now we can add more cities in the URL column, drag down the formula and watch the data unfolds.

Import Data from Any Website to Google Sheets

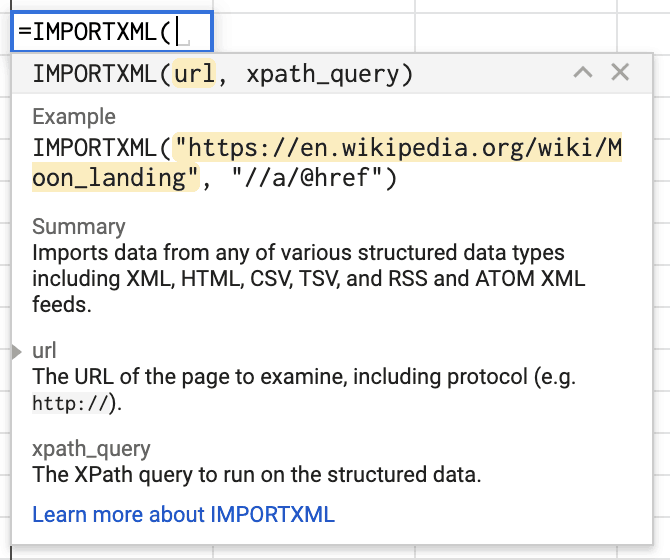

Now, if the website you’re looking to scrape with Google Sheets does not consist of tables and lists but raw code, you may need a formula a bit more powerful to get the job done. Meet =IMPORTXML.

Like IMPORTHTML, the recurring element here is the URL. The Xpath Query though is a concept that is trickier to grasp. The way I explained it in my article that teaches how to monitor Youtube channels in Google Sheets is as follows:

To fill the Xpath Query of the IMPORTXML function, we need three elements from the information we’re trying to pull out of the website:

- The HTML Tag where the information resides. So whether it’s an <a>, a <span> or something else…

- Whether the identifier is an “id” or a “class”

- The name of the “id” or the “class”. If you have the choice between an “id” or a “class”, always refer to the “id” as it’s more unique and less prone to mistakes.

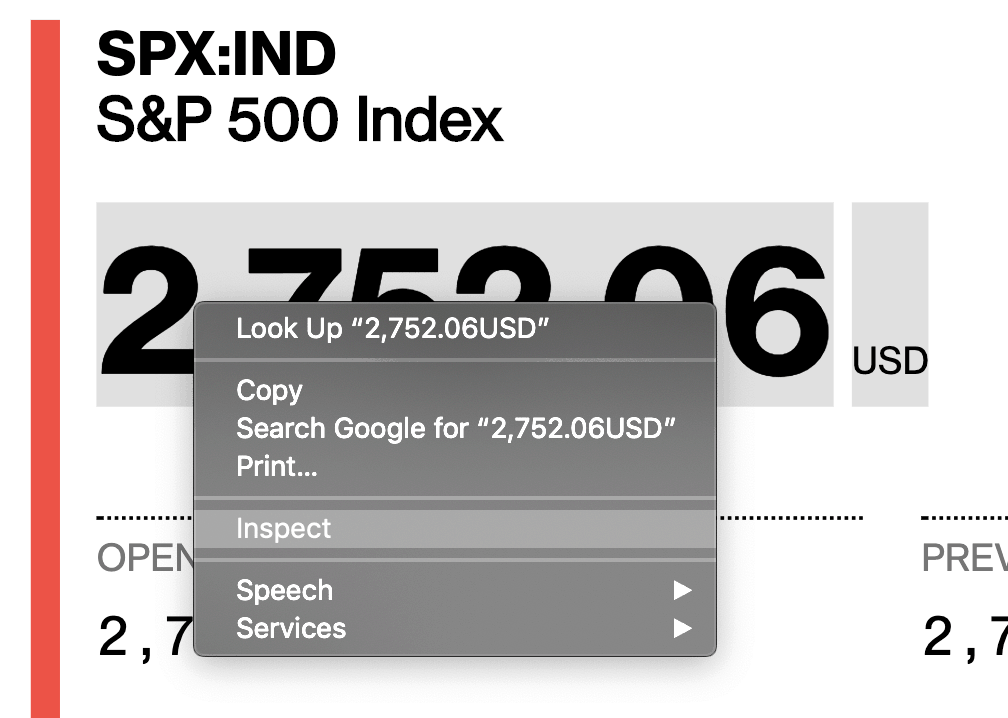

For instance, let’s get on Bloomberg’s Stocks website on this page (https://www.bloomberg.com/quote/SPX:IND) and inspect whatever this big number in bold means:

Leading to this:

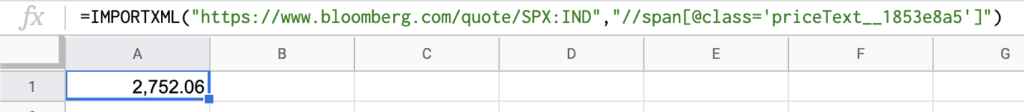

Based on this result, this is how you would use the IMPORTXML formula:

And boom, that’s it. If you follow the same logic that we used to automatically generate results for the Numbeo spreadsheet, no doubt you can use the same tweakings to scrape multiple pages of the same website all in one spreadsheet.

Import JSON Content from any website to Google Sheets

If you’re encountering difficulties with IMPORTXML, it is sometimes useful to look into how we could import the content of JSON sources into Google Sheets.

Section in Progress.

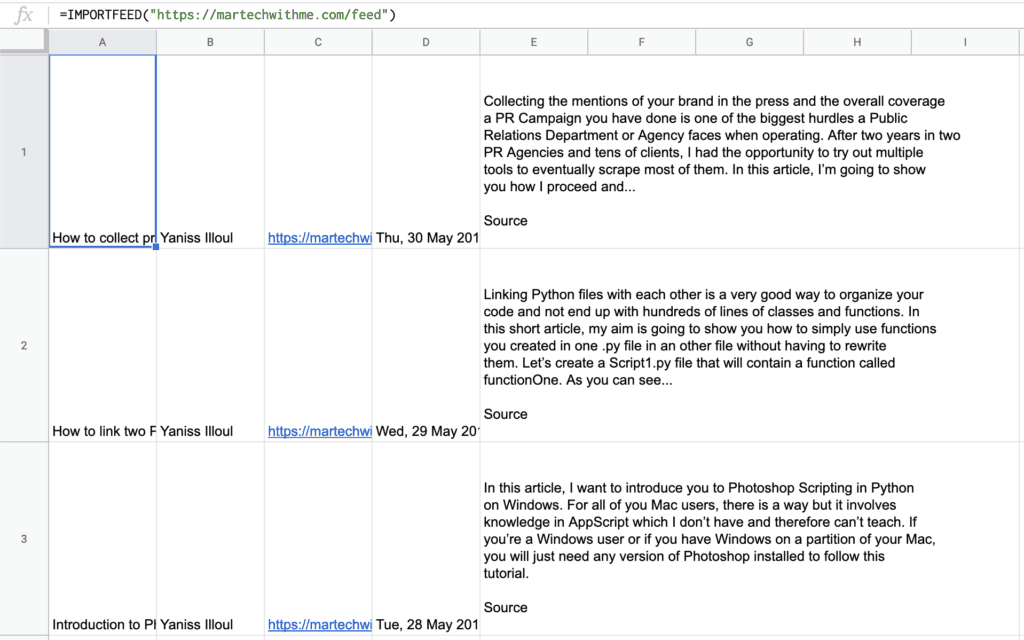

Bonus: Import RSS Feeds in Google Sheets

A neat feature that Google Sheets allows is to import RSS feeds inside a spreadsheet. I haven’t found a real use case for this formula but maybe you peeps will think about something. Anyway that’s how it looks when used with my own RSS feed:

Conclusion

And that’s pretty much it. I hope this article has been enlightening for you. If you have any question on how to use one of these formulas or are encountering any issues, don’t hesitate to leave a comment in the appropriate section down below and I will get back to you and try to find a solution.

As always, thank you for taking the time to scroll through my article and I will see you again soon.

6 Responses

Like the googlefinance function for stock prices, I am trying to use the importxml function to input Zacks ranks to show updated ranks on my spreadsheet. Furthermore, I would like to drag this function through 1,000’s of other cells and being all stocks have their own symbol, how do you recommend I drag this function across thousands of cells?

Thank you in advance

I tried the importxml function for the bloomberg example, =importxml(“https://www.bloomberg.com/quote/SPX:IND”,”//span[@class=’priceText__06f600fa3e’]”)

google sheets gives me “Error: Imported content is empty”

Any idea why I’m getting this error?

Hello Bryan, thanks for your comment.

So I do have the reason why it’s not working but not the solution unfortunately…

If you use this formula, “=importxml(“https://www.bloomberg.com/quote/SPX:IND”,”//p”)”, you will be able to scrape all of the paragraphs from the webpage in the first argument. And this is where I noticed that Bloomberg has probably installed some anti-scraping feature on their website as this is what Google’s robots see when you use the IMPORTXML formula to retrieve the

from the page.

Unfortunately, it looks like getting data from Bloomberg.com with the IMPORTXML formula is not possible anymore. If you are interested in getting stock prices in Google Sheets, I would suggest looking into other websites that offer the same kind of data as Bloomberg.com does and try to scrape them instead. Hopefully there is one that is not trying to block robots so that you can use Google Sheets to retrieve the data :).

Another thing you could do is use the Google Finance formula. It might be a bit more cumbersome to use but I found it worked quite well. Here is an example on how to get the current stock price for Apple in USD: =GOOGLEFINANCE(“NASDAQ:AAPL”, “price”).

I hope that helps.

Best,

Yaniss

Hello, hope you’re doing good.

I wonder what would be the syntax to pull the first row of data for the CMEgroup site and also the date for the last update. I tried with the syntax suggested above, but it didn’t seem to work.

https://www.cmegroup.com/markets/equities/sp/e-mini-sandp500.settlements.html

As I am posting this, on this page, we currently see that the last update was “Last Updated 04 Feb 2022 06:00:00 PM CT”.

And here is the data for the first row.

MAR 22 4518.00 4532.50 4438.50 4485.50 +23.50 4492.50 1,884,479 2,214,200

Thank you.